Challenge Significance

As deepfake technology advances rapidly, fake images and audio-visual content threaten social security and media credibility. The Deepfake Detection and Localization Challenge (DDL Challenge) aims to:

- Enhance detection interpretability by providing intuitive evidence through spatial and temporal localization (e.g., pixel-level tampered regions, timestamps of forged segments).

- Address multi-modal risks by tackling complex attacks such as "forged audio + authentic video," filling the existing technical gaps.

- Promote technology inclusivity by providing access to the world's largest multi-modal deepfake dataset (1.8M+ samples), which encompasses 88 forgery techniques.

Competition Rules and Incentives

1. Participation Guidelines

a) Model Submission Requirements

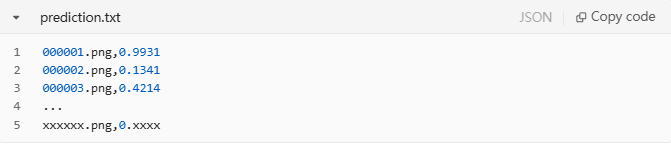

- Each track permits only one model submission, and that model must address both the classification and localization tasks.

- All models must build on open-source pre-trained architectures. Teams that develop their own proprietary models must publicly release the model specifications and training protocols under an open-source license (e.g., MIT, Apache 2.0) during the competition period.

- Winning solutions must open-source their full implementation, including:

  - Training pipelines and hyperparameter configurations

  - Evaluation code with reproducibility documentation

  - Final model weights in standard formats

- Violations of these rules will result in disqualification. The organizing committee reserves final authority over all competition-related matters.

- Additional samples generated from the released training set with data augmentation or deepfake tools may be used for training, but those tools must be submitted so that the results can be reproduced.

2. Awards and Recognition

- Monetary prizes: Substantial monetary awards will be granted to top-performing teams across both tracks.

- Academic recognition: Exceptional solutions will be invited for presentation at IJCAI.

Challenge Content

This challenge consists of two tracks focusing on detection and localization of deepfake artifacts:

Track 1: Image Detection and Localization (DDL-I)

- Tasks: Real/Fake Classification (Cla) + Spatial Localization (SL).

- Dataset: Over 1.5 million images covering 61 manipulation techniques, including single-face and multi-face tampering scenarios.

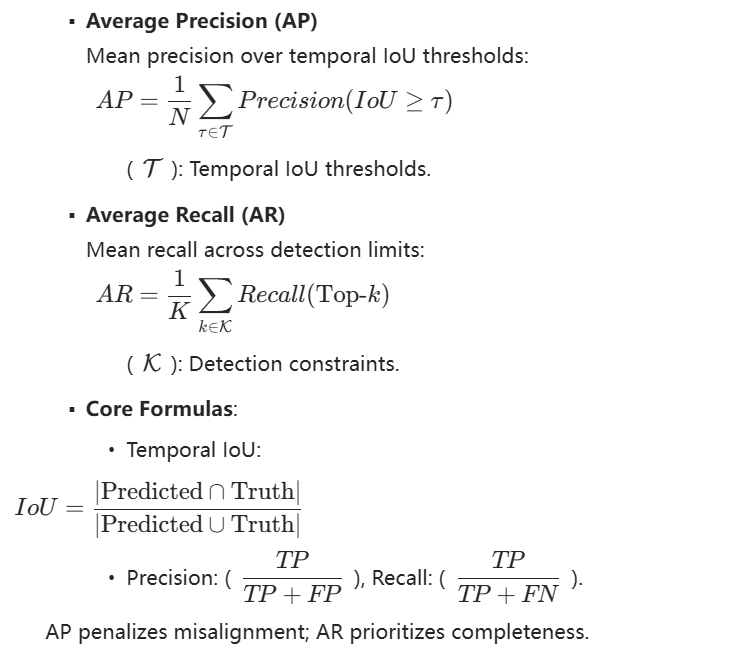

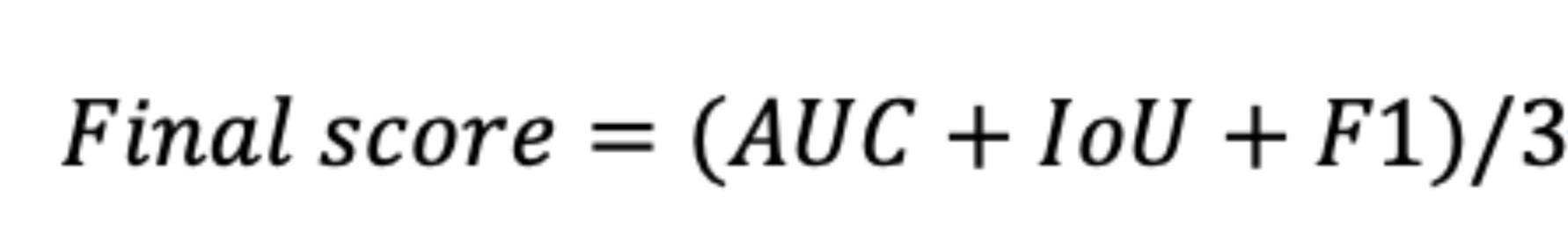

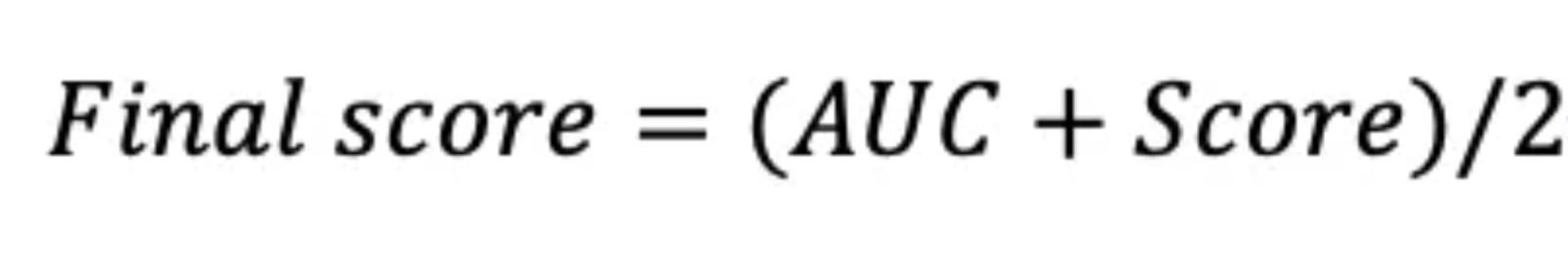

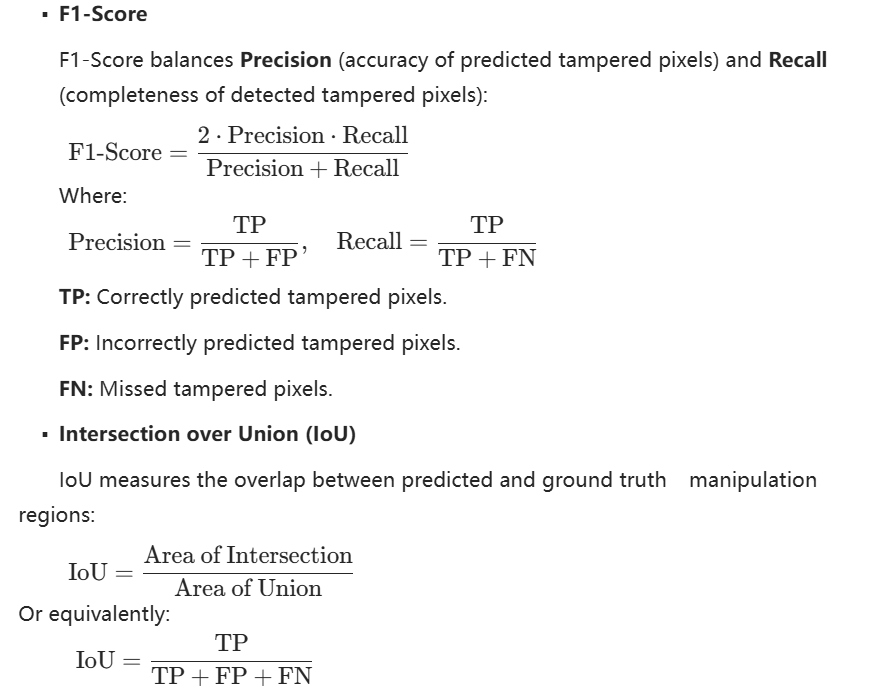

- Evaluation Metrics: Area Under the ROC Curve (AUC) for detection; F1 score and Intersection over Union (IoU) for spatial localization (localization metrics are calculated exclusively on fake samples).
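As a rough illustration of the Track 1 metrics listed above, the following minimal sketch computes AUC from real/fake scores and pixel-level IoU and F1 from binary masks, averaging the localization scores over fake samples only. It is not the official evaluation code: the function names, the mask representation (nested lists of 0/1), and the per-sample averaging are assumptions made for this example.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a fake sample (label 1) outscores
    a real one (label 0); ties count as half a win."""
    fake = [s for y, s in zip(labels, scores) if y == 1]
    real = [s for y, s in zip(labels, scores) if y == 0]
    if not fake or not real:
        raise ValueError("AUC needs at least one real and one fake sample")
    wins = sum((f > r) + 0.5 * (f == r) for f in fake for r in real)
    return wins / (len(fake) * len(real))


def mask_iou_f1(pred, truth):
    """Pixel-level IoU and F1 for two binary masks of equal shape."""
    tp = fp = fn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
    # Assumption: two empty masks score 0.0 rather than raising an error.
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    f1 = 2 * tp / (2 * tp + fp + fn) if (2 * tp + fp + fn) else 0.0
    return iou, f1


def localization_scores(samples):
    """Mean IoU and F1 over fake samples only.
    Each sample is (label, predicted_mask, ground_truth_mask)."""
    pairs = [mask_iou_f1(p, t) for label, p, t in samples if label == 1]
    if not pairs:
        return 0.0, 0.0
    mean_iou = sum(iou for iou, _ in pairs) / len(pairs)
    mean_f1 = sum(f1 for _, f1 in pairs) / len(pairs)
    return mean_iou, mean_f1
```

Real samples contribute to AUC but are skipped by `localization_scores`, which mirrors the rule that localization is scored only on fake samples.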

Track 2: Audio-Visual Detection and Localization (DDL-AV)

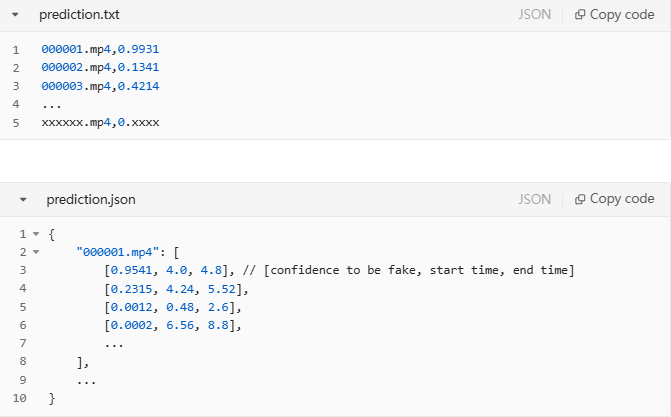

- Tasks: Real/Fake Classification (Cla) + Temporal Localization (TL).

- Dataset: 300,000+ samples integrating 9 audio manipulation methods and 18 video forgery techniques.

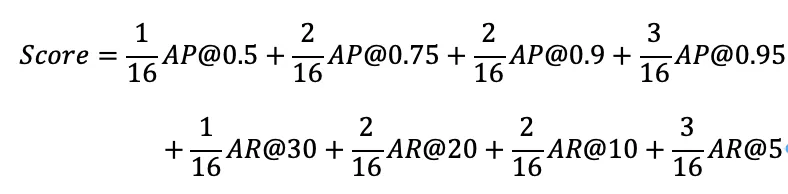

- Evaluation Metrics: Area Under the ROC Curve (AUC) for detection; Average Precision (AP) and Average Recall (AR) for temporal localization (localization metrics are calculated exclusively on fake samples).
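To make the temporal localization metrics more concrete, here is a minimal sketch of AP and AR computed from predicted forged segments. The challenge text does not specify the IoU thresholds, the number of proposals considered, or the matching procedure, so the values and the greedy matching used below are assumptions for illustration only, not the official protocol.

```python
def temporal_iou(a, b):
    """IoU of two (start, end) segments, e.g. in seconds."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def match_predictions(preds, truths, iou_thr):
    """Greedily match predictions (start, end, confidence) to ground-truth
    segments in descending confidence; each truth is matched at most once.
    Returns one True/False hit per prediction, in confidence order."""
    used, hits = set(), []
    for start, end, _ in sorted(preds, key=lambda p: -p[2]):
        best, best_iou = None, iou_thr
        for i, t in enumerate(truths):
            iou = temporal_iou((start, end), t)
            if i not in used and iou >= best_iou:
                best, best_iou = i, iou
        if best is not None:
            used.add(best)
        hits.append(best is not None)
    return hits


def average_precision(preds, truths, iou_thr=0.5):
    """Non-interpolated AP at one IoU threshold (threshold is an assumption)."""
    if not truths:
        return 0.0
    tp, total = 0, 0.0
    for rank, hit in enumerate(match_predictions(preds, truths, iou_thr), start=1):
        if hit:
            tp += 1
            total += tp / rank  # precision at this true-positive rank
    return total / len(truths)


def average_recall(preds, truths, iou_thrs=(0.5, 0.75, 0.95), top_n=10):
    """Recall of the top_n most confident predictions, averaged over
    IoU thresholds (thresholds and top_n are assumptions)."""
    if not truths:
        return 0.0
    top = sorted(preds, key=lambda p: -p[2])[:top_n]
    recalls = [sum(match_predictions(top, truths, thr)) / len(truths)
               for thr in iou_thrs]
    return sum(recalls) / len(recalls)
```

For example, with ground-truth forged segments `[(1.0, 3.0), (6.0, 8.0)]` and predictions `[(1.0, 3.0, 0.9), (4.0, 5.0, 0.8), (6.0, 9.0, 0.7)]`, the first and third predictions count as hits at an IoU threshold of 0.5, while only the first remains a hit at stricter thresholds.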